In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data.

Univariate linear regression

Univariate linear regression (regression with a single input variable x) is also called simple linear regression.

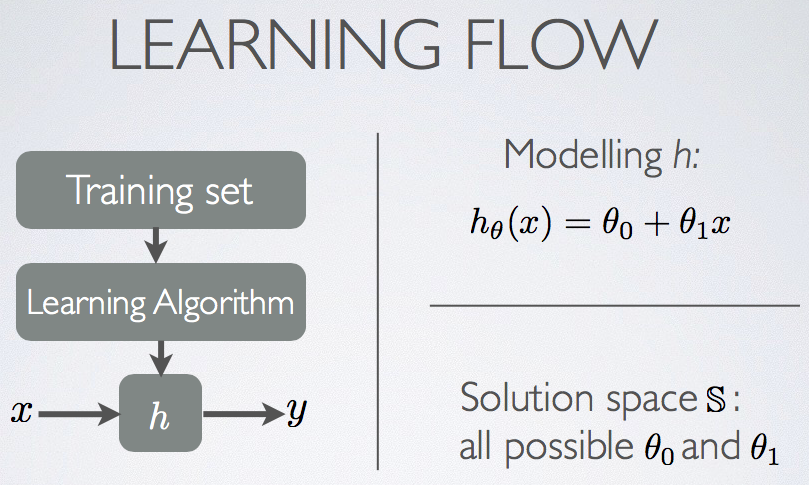

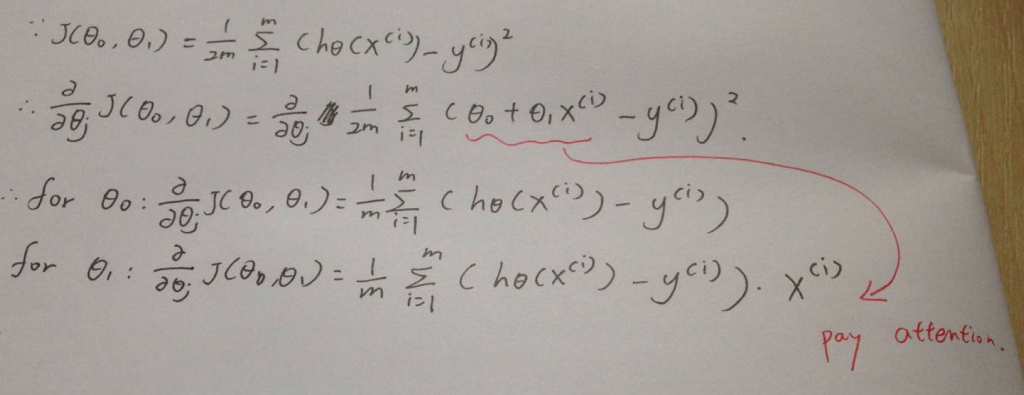

Given a model h with solution space S and a training set {X, Y}, a learning algorithm finds the solution that minimizes the cost function J(S). This raises the question of how to choose θ. For univariate linear regression the hypothesis is hθ(x) = θ0 + θ1x, so we need to find θ0 and θ1 such that hθ(x) is close to y for the examples in our training set {X, Y}.
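As a minimal sketch (plain Python, using a small hypothetical training set generated from y = 1 + 2x), the hypothesis hθ(x) = θ0 + θ1x can be evaluated for two candidate choices of θ to see which one puts h(x) close to y. The name `hypothesis` and the data are illustrative assumptions, not part of the original notes.

```python
def hypothesis(theta0: float, theta1: float, x: float) -> float:
    """Univariate linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


# Hypothetical toy training set {X, Y}, generated from y = 1 + 2x.
X = [0.0, 1.0, 2.0, 3.0]
Y = [1.0, 3.0, 5.0, 7.0]

# Compare two candidate parameter choices by their predictions.
good = [hypothesis(1.0, 2.0, x) for x in X]  # matches Y exactly
bad = [hypothesis(0.0, 1.0, x) for x in X]   # systematically too low
print(good)  # [1.0, 3.0, 5.0, 7.0]
print(bad)   # [0.0, 1.0, 2.0, 3.0]
```

Eyeballing predictions only works for tiny data sets; the cost function below turns "close to y" into a single number we can minimize.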

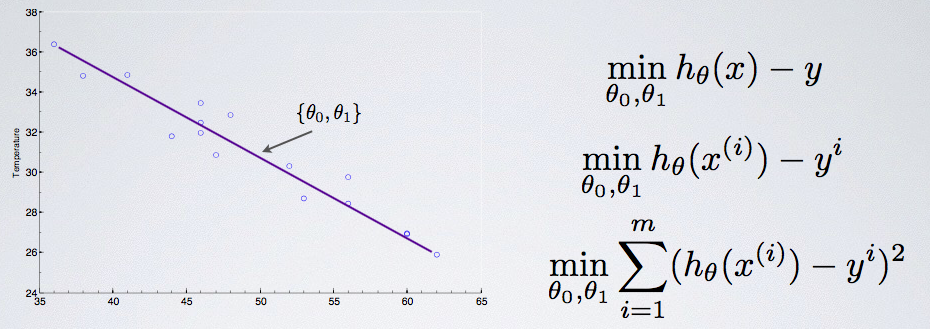

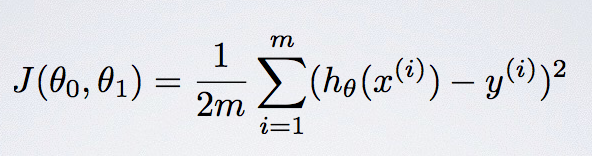

First, we want to minimize the difference hθ(x) − y, not just for one example but summed over every pair (x, y) in the training set; this is the third equation. We square each difference because it can be negative, and squaring makes every term non-negative, so positive and negative errors cannot cancel each other out. We then divide the sum by 2m. Dividing by m (the number of training examples) turns the sum into an average, which removes the dependence on the size of the data set. The extra factor of 2 simplifies the derivative: to minimize the cost we will use the steepest descent method, which is based on the derivative of this function. The derivative of a² is 2a, and since our function is a square, that 2 cancels the 1/2.
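To see where the 2 cancels, here is the derivative of the squared-error cost with respect to θ1, assuming the hypothesis hθ(x) = θ0 + θ1x and writing (x^(i), y^(i)) for the i-th of m training examples (this indexing notation is an assumption for illustration). The chain rule brings down the exponent 2, which cancels the 1/2:

$$
\frac{\partial}{\partial \theta_1} \frac{1}{2m} \sum_{i=1}^{m} \left( \theta_0 + \theta_1 x^{(i)} - y^{(i)} \right)^2
= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( \theta_0 + \theta_1 x^{(i)} - y^{(i)} \right) x^{(i)}
= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}
$$

For θ0 the inner derivative is 1 instead of x^(i), which gives (1/m) Σ (hθ(x^(i)) − y^(i)).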

This gives our cost function:

$$
J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2
$$
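The squared-error cost J(θ0, θ1) = (1/(2m)) Σ (hθ(x^(i)) − y^(i))² translates directly into code. This is a minimal plain-Python sketch; the function name `cost` and the toy data (generated from y = 1 + 2x) are hypothetical.

```python
from typing import Sequence


def cost(theta0: float, theta1: float, X: Sequence[float], Y: Sequence[float]) -> float:
    """Squared-error cost J(theta0, theta1) = 1/(2m) * sum((h(x) - y)^2)."""
    m = len(X)
    total = 0.0
    for x, y in zip(X, Y):
        error = (theta0 + theta1 * x) - y  # h_theta(x) - y, may be negative
        total += error ** 2                # squaring makes every term >= 0
    return total / (2 * m)                 # 1/m averages, 1/2 simplifies the derivative


X = [0.0, 1.0, 2.0, 3.0]
Y = [1.0, 3.0, 5.0, 7.0]
print(cost(1.0, 2.0, X, Y))  # 0.0  (perfect fit)
print(cost(0.0, 1.0, X, Y))  # 3.75 (errors -1, -2, -3, -4 -> 30 / 8)
```

The perfect line gives J = 0 and the worse line gives a positive cost; gradient descent tries to drive J down toward its minimum.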

Gradient descent

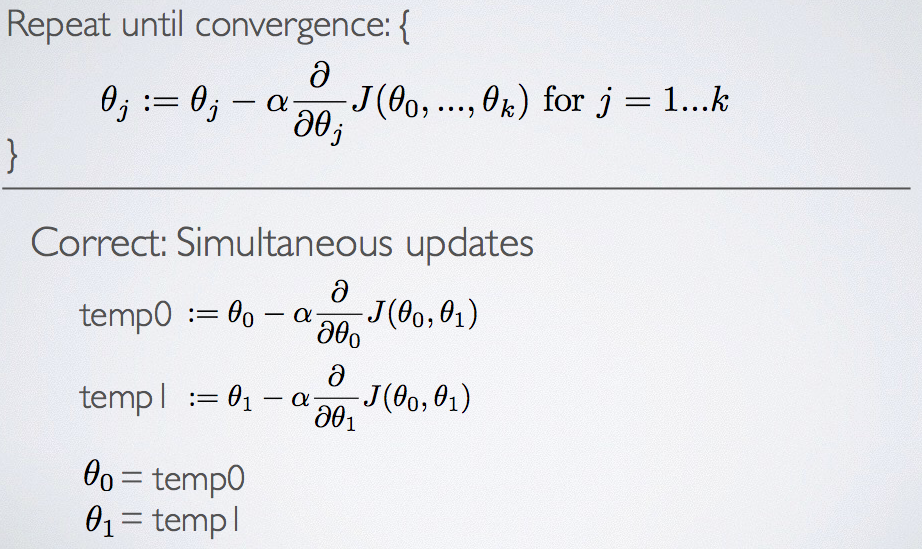

Given a cost function J(θ0, θ1, …, θk), start with some initial values for θ0, …, θk and keep updating them to reduce J, hoping to end at the minimum (and to know when we have reached it).
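A minimal sketch of batch gradient descent for the univariate model, assuming a fixed learning rate `alpha` and a fixed number of iterations as the stopping rule (both are illustrative choices, not values from the notes). The gradients are the partial derivatives of the 1/(2m) squared-error cost.

```python
from typing import Sequence, Tuple


def gradient_descent(X: Sequence[float], Y: Sequence[float],
                     alpha: float = 0.1, iterations: int = 1000,
                     theta0: float = 0.0, theta1: float = 0.0) -> Tuple[float, float]:
    """Batch gradient descent for h(x) = theta0 + theta1 * x with the 1/(2m) squared-error cost."""
    m = len(X)
    for _ in range(iterations):
        errors = [(theta0 + theta1 * x) - y for x, y in zip(X, Y)]
        grad0 = sum(errors) / m                            # dJ/dtheta0
        grad1 = sum(e * x for e, x in zip(errors, X)) / m  # dJ/dtheta1
        # Both gradients come from the same old parameters: a simultaneous update.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


X = [0.0, 1.0, 2.0, 3.0]
Y = [1.0, 3.0, 5.0, 7.0]
theta0, theta1 = gradient_descent(X, Y)
print(round(theta0, 3), round(theta1, 3))  # 1.0 2.0
```

On this toy data the parameters approach θ0 = 1 and θ1 = 2. If `alpha` is too large, J can grow instead of shrink, so printing J every few iterations is a cheap way to check that it is decreasing.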

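Let’s look at the simultaneous update. The order of the steps cannot be changed: first compute both temp0 and temp1 from the current parameters, and only then assign them to θ0 and θ1. If you update θ0 before calculating temp1, you will get a different temp1, because θ0 is used when calculating temp1. The following calculation makes this clearer (α is the learning rate):

$$
\begin{aligned}
\text{temp0} &:= \theta_0 - \alpha \frac{\partial}{\partial \theta_0} J(\theta_0, \theta_1) \\
\text{temp1} &:= \theta_1 - \alpha \frac{\partial}{\partial \theta_1} J(\theta_0, \theta_1) \\
\theta_0 &:= \text{temp0} \\
\theta_1 &:= \text{temp1}
\end{aligned}
$$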
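The difference between the simultaneous update and overwriting θ0 first can be seen by taking a single step from θ0 = θ1 = 0. This is an illustrative sketch: the helper names `grad`, `simultaneous_step`, and `sequential_step`, the learning rate, and the data are all hypothetical.

```python
from typing import Tuple

X = [0.0, 1.0, 2.0, 3.0]
Y = [1.0, 3.0, 5.0, 7.0]
ALPHA = 0.1  # hypothetical learning rate


def grad(theta0: float, theta1: float) -> Tuple[float, float]:
    """Partial derivatives of J(theta0, theta1) = 1/(2m) * sum((h(x) - y)^2)."""
    m = len(X)
    errors = [(theta0 + theta1 * x) - y for x, y in zip(X, Y)]
    return sum(errors) / m, sum(e * x for e, x in zip(errors, X)) / m


def simultaneous_step(theta0: float, theta1: float) -> Tuple[float, float]:
    # Correct: both temps are computed from the OLD parameters, then assigned.
    g0, g1 = grad(theta0, theta1)
    temp0 = theta0 - ALPHA * g0
    temp1 = theta1 - ALPHA * g1
    return temp0, temp1


def sequential_step(theta0: float, theta1: float) -> Tuple[float, float]:
    # Incorrect: theta0 is overwritten before temp1 is computed,
    # so temp1 is based on the NEW theta0.
    theta0 = theta0 - ALPHA * grad(theta0, theta1)[0]
    temp1 = theta1 - ALPHA * grad(theta0, theta1)[1]
    return theta0, temp1


print([round(t, 4) for t in simultaneous_step(0.0, 0.0)])  # [0.4, 0.85]
print([round(t, 4) for t in sequential_step(0.0, 0.0)])    # [0.4, 0.79]
```

Both versions produce the same θ0, but the sequential version computes temp1 with the already-updated θ0, so θ1 ends up at 0.79 instead of 0.85.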

Note that gradient descent works the same way for a single variable as for multiple variables: every parameter θj is updated with the same rule, and all parameters are updated simultaneously.
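To illustrate that the rule carries over unchanged, here is a sketch of gradient descent for any number of features, assuming the common convention that each input row starts with a constant feature x0 = 1 so that θ0 is handled like every other θj. The function name `gradient_descent_multi` and the data are hypothetical.

```python
from typing import List, Sequence


def gradient_descent_multi(X: Sequence[Sequence[float]], Y: Sequence[float],
                           alpha: float = 0.1, iterations: int = 1000) -> List[float]:
    """Batch gradient descent for h(x) = theta . x, where each row of X starts with x0 = 1."""
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        errors = [sum(t * xj for t, xj in zip(theta, row)) - y for row, y in zip(X, Y)]
        # Same rule for every j: theta_j -= alpha * (1/m) * sum(error_i * x_ij).
        # The comprehension reads only the old theta, so the update is simultaneous.
        theta = [theta[j] - alpha * sum(e * row[j] for e, row in zip(errors, X)) / m
                 for j in range(n)]
    return theta


# One feature plus the bias column x0 = 1 reproduces the univariate case.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
Y = [1.0, 3.0, 5.0, 7.0]
print([round(t, 3) for t in gradient_descent_multi(X, Y)])  # [1.0, 2.0]
```

With a single feature this performs exactly the same updates as the univariate version; adding more columns to each row needs no change to the update rule.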