Recently, I ran into a problem: how can I use my iPhone in Google Cardboard together with Leap Motion? Unlike the Oculus and other VR headsets, an iPhone gives us no way to plug in a Leap Motion, so the existing Unity SDK built by Leap Motion cannot be used directly. In this tutorial, I explain how to make it work.

The basic idea is to let a Mac laptop drive the Leap Motion and transfer the data to the iPhone over Wi-Fi. You need to know a little about socket communication in C# and have some basic programming knowledge of Unity.

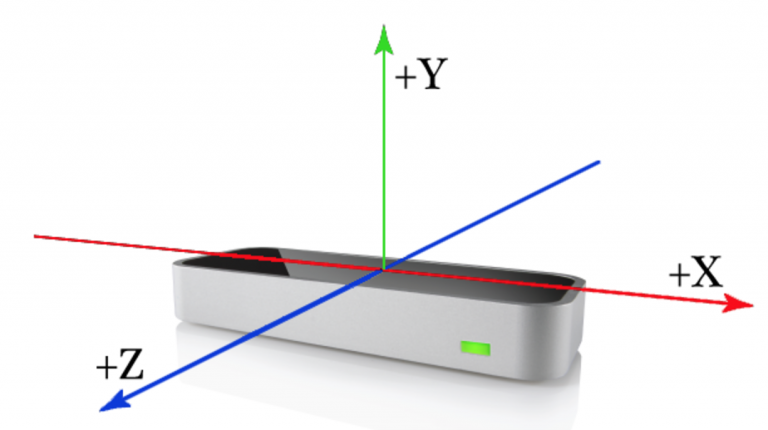

The first thing to do is set up a C# server on your laptop that receives hand information from the Leap Motion. The Leap Motion C# SDK documentation on the official Leap Motion website shows what kinds of hand information you can collect. Keep in mind that the Leap Motion system uses a right-handed Cartesian coordinate system whose origin is centered at the top of the Leap Motion Controller.
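The coordinate system matters once the data reaches Unity, because Unity uses a left-handed coordinate system measured in meters while Leap Motion reports millimeters. A minimal sketch of the conversion, assuming the controller sits flat on the desk (not mounted on the headset); the class and method names are made up for illustration:

```csharp
static class LeapToUnity
{
    // Leap Motion: right-handed, millimeters. Unity: left-handed, meters.
    // Flipping the sign of z switches handedness; 0.001 converts mm to m.
    public static float[] Convert(float x, float y, float z)
    {
        const float MillimetersToMeters = 0.001f;
        return new[]
        {
            x * MillimetersToMeters,
            y * MillimetersToMeters,
            -z * MillimetersToMeters
        };
    }
}
```

You can do this conversion on either side of the connection; doing it on the phone keeps the server messages in raw Leap Motion units.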

Server part:

First, try some of the examples that come with the C# SDK, then change the code so that it works as a socket server. My approach is to declare the socket variables at the top of the class.
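A minimal sketch of such declarations, using the standard .NET socket types. The class name, field names and port number are assumptions for illustration; in practice these fields would live in your Leap Motion listener class:

```csharp
using System.Net.Sockets;

// Hypothetical holder for the server state.
class HandServerState
{
    public const int Port = 5000;   // assumed port; any free port works
    public TcpListener Listener;    // waits for the phone to connect
    public TcpClient Client;        // the connected phone
    public NetworkStream Stream;    // used to send hand data to the phone
}
```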

Then, in the public override void OnInit (Controller controller) function, we start our server.
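Starting the server could look roughly like the sketch below. It only uses standard .NET calls; the class name HandServer and its Start method are hypothetical, and the fields are repeated so the snippet compiles on its own. The idea is that OnInit calls Start once:

```csharp
using System;
using System.Net;
using System.Net.Sockets;

class HandServer
{
    private TcpListener listener;
    private TcpClient client;
    private NetworkStream stream;

    // Intended to be called once, for example from OnInit.
    public void Start(int port)
    {
        // Listen on all network interfaces so the phone can reach us over Wi-Fi.
        listener = new TcpListener(IPAddress.Any, port);
        listener.Start();
        Console.WriteLine("Waiting for the phone on port " + port);

        // Blocks until the phone connects.
        client = listener.AcceptTcpClient();
        stream = client.GetStream();
        Console.WriteLine("Phone connected");
    }
}
```

Because AcceptTcpClient blocks, nothing else happens on that thread until the phone connects. If that is a problem in your setup, accepting the connection on a separate thread is one alternative.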

Leap Motion has public override void OnFrame(Controller controller), which is called continuously with the latest hand information. This is where I send data to the client that is connected to the server.

You can put any information into textinput. In this example, I recognize the clockwise and counterclockwise gestures.

That completes the server side.
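Sending can be sketched as a small helper that OnFrame calls with the text it wants to transmit. The helper name HandSender is hypothetical, and the trailing newline is my assumption: TCP delivers a stream of bytes, not separate messages, so the client needs some delimiter to tell messages apart.

```csharp
using System.IO;
using System.Text;

static class HandSender
{
    // Writes one newline-terminated UTF-8 message to the given stream.
    // On the server, the stream would be the NetworkStream of the connected phone.
    public static void Send(Stream stream, string textinput)
    {
        byte[] data = Encoding.UTF8.GetBytes(textinput + "\n");
        stream.Write(data, 0, data.Length);
        stream.Flush();
    }
}
```

Inside OnFrame you would build textinput from the current frame (for example from controller.Frame()) and pass it to Send.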
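For the circle direction, the Leap Motion v2 sample code compares the angle between the finger direction and the circle normal with 90 degrees. The sketch below isolates that decision in a plain function with a hypothetical name; how you obtain the angle is an assumption on my side (in the v2 C# sample it is circle.Pointable.Direction.AngleTo(circle.Normal), after enabling the circle gesture on the controller), so check it against the SDK version you use:

```csharp
using System;

static class CircleDirection
{
    // angleToNormal: angle in radians between the pointing finger's
    // direction and the normal of the circle being drawn.
    public static string Classify(double angleToNormal)
    {
        return angleToNormal <= Math.PI / 2 ? "clockwise" : "counterclockwise";
    }
}
```

The returned string can be used directly as textinput.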

Client part:

The client is a Unity project, so in the Start function we need to connect the project to the server.
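A minimal sketch of the connection step as a Unity script. The class name, IP address and port are placeholders (use your laptop's address on the Wi-Fi network and the port your server listens on), and the body of GetInformation is left empty here:

```csharp
using System.Net.Sockets;
using System.Threading;
using UnityEngine;

public class LeapClient : MonoBehaviour
{
    public string serverIp = "192.168.1.10"; // placeholder: the laptop's address
    public int port = 5000;                  // placeholder: must match the server

    private TcpClient client;
    private Thread receiveThread;

    void Start()
    {
        // Connects synchronously; throws a SocketException if the server is unreachable.
        client = new TcpClient(serverIp, port);

        // Receive on a background thread so the main thread keeps rendering.
        receiveThread = new Thread(GetInformation);
        receiveThread.IsBackground = true;
        receiveThread.Start();
    }

    void GetInformation()
    {
        // The receiving loop goes here.
    }

    void OnDestroy()
    {
        // Closing the connection also ends a blocked read on the thread.
        if (client != null) client.Close();
    }
}
```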

If we don’t use a thread here, your project will easily get stuck, because it is always waiting for new messages from the server. GetInformation is the function that receives the data from the server.
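The receiving loop can be sketched as below. It assumes the server terminates every message with a newline, which is my convention and not something the Leap Motion SDK prescribes. The class name and the onMessage callback are hypothetical; on the phone, the stream would come from the connected TcpClient's GetStream():

```csharp
using System;
using System.IO;
using System.Text;

static class Receiver
{
    // Blocks while reading newline-terminated messages and hands each one
    // to onMessage. Returns when the server closes the connection.
    public static void GetInformation(Stream stream, Action<string> onMessage)
    {
        using (var reader = new StreamReader(stream, Encoding.UTF8))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                onMessage(line);
            }
        }
    }
}
```

Since ReadLine blocks until data arrives, this function must run on the worker thread and never inside Update.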

Unity does not let us update the UI from a worker thread, so those calls have to run on the main thread. I use a simple trick to update the interface: set a boolean variable getnewinfo when new data arrives, and in Unity’s default void Update(), write an if-statement that checks it and performs the UI change. 🙂

Build your project and install it on your iPhone:

Now you can build your project and install it on your iPhone. For a better experience, I recommend using Vuforia’s AR Camera. With it, you see the real world and your hand rather than the default sky in Unity. 🙂
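The flag trick can be sketched like this. Apart from getnewinfo, all names are hypothetical, and the lock is my addition so that the message and the flag always change together; Debug.Log stands in for whatever UI change you want:

```csharp
using UnityEngine;

public class GestureDisplay : MonoBehaviour
{
    private readonly object gate = new object();
    private bool getnewinfo;   // set by the receiving thread
    private string latest;     // last message received from the server

    // Called from the receiving thread; must not touch Unity objects.
    public void OnMessage(string message)
    {
        lock (gate)
        {
            latest = message;
            getnewinfo = true;
        }
    }

    // Runs on Unity's main thread once per frame.
    void Update()
    {
        string message = null;
        lock (gate)
        {
            if (getnewinfo)
            {
                message = latest;
                getnewinfo = false;
            }
        }

        if (message != null)
        {
            Debug.Log("Gesture: " + message); // replace with the real UI change
        }
    }
}
```

If several messages arrive between two frames, only the most recent one is shown, which is usually what you want for gestures.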

Further implementation and questions:

The Leap Motion C# SDK also collects detailed information about the fingers. You can send it to your phone and build new features, such as holding virtual objects in your hand.

If you have any further questions, please leave a comment below. I am going to upload the sample code to GitHub after April. Thanks for reading.